Acadlore takes over the publication of IJTDI from 2025 Vol. 9, No. 4. The preceding volumes were published under a CC BY 4.0 license by the previous owner, and displayed here as agreed between Acadlore and the previous owner. ✯ : This issue/volume is not published by Acadlore.

Image Enhancement Technique Utilizing YOLO Model for Automatic Number Plate Recognition

Abstract:

The significant increase in Indonesian vehicle numbers has highlighted the importance of a robust ANPR system. Over the past few years, the number has increased by up to 4% each year and is expected to continue rising as long as economic growth continues. This study utilized the YOLOv9 model and EasyOCR along with image enhancement as a pipeline for the license plate recognition process. YOLOv9 was chosen for object detection because its architecture offers good stability in terms of performance and efficiency, even outperforming newer models with a 99.3% mAP@50 in the YOLOv9s variant. EasyOCR was used to recognize and extract characters from license plates. To enhance image quality, Real-ESRGAN upscales image resolution using a GAN architecture and addresses character blurring caused by low-resolution images. Additionally, CLAHE further enhances the clarity of low-contrast characters by employing a histogram to redistribute image intensities. The proposed method achieved an accuracy of 84.36% when tested on 100 image samples in real-world situations, indicating fairly good performance despite challenges like blurring and low contrast. The results highlight the potential of ANPR solutions in addressing the common challenges of real-time license plate recognition, contributing to more efficient traffic surveillance and enforcement systems, especially in Indonesia.

1. Introduction

Indonesia’s vehicle count has been rising steadily, driven by the country’s economic growth and growing population needs. As one of the world’s most populous nations, Indonesia faces substantial challenges in managing traffic. Data from the Central Bureau of Statistics highlights this upward trend, with vehicle numbers climbing consistently year after year. In 2022, there were 125,305,332 motorcycles in Indonesia, with an annual growth rate of 4.11%, while the number of cars increased by 3.73% to 17,168,862 units [1].

A vehicle license plate is an important thing for every vehicle user who drives on public highways must have. Each vehicle has a unique license plate number as an identifier for traffic surveillance and law enforcement purposes. The high accidents, vehicle theft, and traffic violations in Indonesia makes it difficult for law enforcement to conduct manual surveillance [2, 3]. This emphasizes how important automated traffic surveillance systems are in ensuring more effective law enforcement. Nowadays, Electronic Traffic Law Enforcement (ETLE) is an innovative automated traffic surveillance technology that can reduce the manual law enforcement. This technology was first introduced to Indonesia in 2018 and can automatically recognize vehicle license plates through Automatic Number Plate Recognition (ANPR), which can improve the efficiency of traffic surveillance [4, 5].

Automating number plate recognition systems could be possible with advances in artificial intelligence and computer vision. An object detection model called YOLO (You Only Look Once) can be used to detect license plates on Indonesian vehicles. Furthermore, optical character recognition (OCR) can be utilized to recognize and extract characters from license plates. However, real-world challenges such as Indonesia’s diverse weather conditions, different license plate angles, and the wide variety of Indonesian license plate styles can lead to recognition issues with this technology.

One approach to addressing these problems is to apply several image-enhancement techniques [6]. Real-ESRGAN and CLAHE are two techniques for improving visual image quality. Real-ESRGAN elevates image resolution, and CLAHE adjusts the image contrast, helping to reduce character recognition errors and making OCR more accurate. This study investigates the implementation of YOLOv9 to detect Indonesian vehicle license plates, as well as the application of image quality enhancement methods, and to evaluate how this approach may increase the accuracy of EasyOCR, especially in real-world conditions.

2. Related Work

YOLO’s primary objective is to instantly locate and identify objects in real time. This object detection model uses a single neural network, which makes it fast and effective. In Figure 1, YOLO divides the image into S×S grids [7]. Eq. (1) is used to calculate a confidence score , which predicts the presence of objects in each grid cell [8]. Based on the probability values from the class probability map, cells containing object parts are combined into a unified bounding box per detected object. The YOLO approach uses non-maximum suppression, eliminating duplicates while minimizing bounding box mistakes [9].

The intersection over Union metric measures how closely the predicted bounding box matches the actual location of an object in the image. This metric divides the overlapping area between the predicted and actual boxes (A∩B) by their total area (A∪B). Figure 2 shows the varying IoU values based on the extent of overlap between both boxes, a higher IoU value indicates that the prediction better matches the actual location of the object [9]. For example, a low IoU value of 0.33 indicates minimal overlap between the predicted and actual boxes, whereas a nearly perfect overlap is represented by a high IoU of 0.95.

The implementation of YOLO for license plate detection in ANPR systems has shown excellent performance in recent years. YOLOv8 was successfully utilized by Sarhan et al. [10] to detect Egyptian license plates in real time. Furthermore, Tran-Anh et al. [11] confirmed that YOLOv8 is effective in detecting license plates from a variety of camera angles. Compared to earlier YOLO models like YOLOv8, the deep learning-based object detection methods in YOLOv9 or YOLOv10 have been shown to be more accurate and effective in real-time detection of objects, making them expected to play a crucial role in the real-time detection [12, 13].

YOLOv9 advances its architecture with two key innovations that enhance performance and efficiency. First, Programmable Gradient Information (PGI) addresses the issue of deep supervision. This issue often creates information bottlenecks that are incompatible with lightweight neural network architectures. The bottlenecks can lead to data loss during transformations in deep neural networks, resulting in unreliable gradients that has a negative impact on the object detection performance. PGI enables the deep neural network to generate more reliable gradients and is appropriate for implementation in lightweight architectures. Second, Generalized Efficient Layer Aggregation Network (GELAN) architecture combines CPSNet with ELAN to improve the stability and performance of the model [14, 15].

Optical character recognition (OCR) is a technology that detects and transforms text from images into readable digital text. OCR uses advanced artificial intelligence and image processing technology to extract characters or text from images. EasyOCR was produced by Jaided AI (2020), which is a popular OCR tool [16]. EasyOCR is well-regarded for being user-friendly and delivering impressive results in extracting text from images. It combines the CRAFT method for detecting text and the CRNN for recognizing individual characters [17, 18]. The CRAFT method uses a Regional Proposal Network (RPN) to detect text regions and a Character Proposal Network (CPN) to improve accuracy by predicting bounding boxes around the text. ResNet and LSTM networks are used to extract features from text, allowing EasyOCR to recognize characters in images [10]. The findings of Vedhaviyassh et al. [19] show that EasyOCR is highly effective for real-time vehicle license plate detection, outperforming Google's TesseractOCR.

Image enhancement is a method for improving and adjusting the visual quality of images. The quality of input images for OCR in real-time Indonesian license plate detection varies according to the environment. Blurry images, high noise levels, and uneven contrast are the leading factors of inaccurate OCR predictions. Researches by Kabiraj [20] and Wang et al. [21] have demonstrated that enhancing image resolution and contrast yields impressive results in improving the accuracy of OCR.

Enhanced Super-Resolution Generative Adversarial Networks (ESRGAN) builds upon SRGAN, which utilizes a GAN architecture where the generator and discriminator work concurrently to significantly enhance image resolution. The discriminator evaluates the high-resolution images produced by the generator. The ESRGAN generator comprises multiple Residual-in-Residual Dense Blocks (RRDBs) that are built up from Dense Block layers. As shown in Figure 3, Dense Blocks use convolutional layers and Leaky ReLU activations to enhance the flow of information and gradients, help prevent vanishing gradient issues and allow the construction of deeper networks [22]. The generator then upscales the image using a series of upsampling layers, producing a high-resolution output image. This architecture transforms low-resolution images into high-resolution ones, resulting in smooth textures and detailed image quality.

Real-ESRGAN is a version of ESRGAN that keeps the same generator architecture but is optimized to enhance image resolution in real-world conditions. This version extends the original ×4 ESRGAN architecture to scale factors of ×2 and ×1, adapting more effectively to handle a wider range of image resolutions. Consequently, the architecture can become a heavy network, and pixel unshuffle must be employed at the new scale factors to reduce spatial dimensions and expand the number of channels. Furthermore, Real-ESRGAN applies an advanced degradation model to convert high-resolution images into synthetic data (see Figure 4) [23]. The degradation model allows the training phase to include more diverse data that reflects real-world conditions, in order to address its challenges. The complexity of its degradation model causes the discriminator architecture used in ESRGAN to be ineffective, so Real-ESRGAN employs a U-Net structure with spectral normalization to effectively manage this problem. The U-Net structure includes a skip connection mechanism, allowing it to generate reliability scores for each image pixel and provide them to the generator [24]. The spectral normalization regularization applied to the U-Net structure improves stability during the training process. This regularization method keeps the discriminator from becoming too sensitive to variations in the input based on real-world noise and artifacts introduced during degradation [25]. As shown in Figure 5, spectral normalization and U-Net’s hierarchical feature extraction allow Real-ESRGAN to effectively handle different distortions while ensuring a stable training process [23]. The mechanism generates high-resolution images with significant improvement, even in challenging real-world conditions.

Contrast Limited Adaptive Histogram Equalization (CLAHE) enhances image contrast, particularly in low-contrast images, by employing histogram equalization [26]. The histogram maps the distribution of pixel intensities in the image. As suggested by its name, CLAHE uses a limiting mechanism called the clip limit to restrict the maximum pixel intensity. If any intensity value exceeds the clip limit, it is equalized to remain within the limit. Dewi et al. [27] demonstrated the good performance of CLAHE in enhancing contrast in low-contrast images compared to other contrast enhancement techniques. Similarly, Chang et al. [28] found comparable results, even though CLAHE does not always outperform other techniques in all situations.

Figure 6 shows the steps for doing histogram adjustment with CLAHE, which are as follows [28, 29]: (1) Split the input image into small blocks; (2) Create a histogram for each block and limit the maximum pixel intensity using a clip limit; (3) Redistribute the pixel values that exceed the limit evenly to the lower part of the histogram; (4) Equalize the histogram to adjust the intensity distribution of grayscale levels within each block. Each pixel intensity within a block has a corresponding probability, which can be determined using the Probability Density Function (PDF) as follows:

where, j denotes the intensity level in a grayscale image, where (or 255) is the maximum value. represents the number of pixels at intensity level j, and n refers to the total number of pixels within the block. Based on PDF(j), CLAHE uses histogram equalization to adjust the pixel intensities within each block. This process redistributes the intensity values, making sure that every image block has a more consistent intensity distribution, thereby enhancing the overall contrast. The distribution is then calculated using the Cumulative Distribution Function (CDF) as follows:

Then, the gray levels of the image blocks can be remapped using the following equation:

$\mathrm{S}_{\mathrm{k}}$ is the output of the remapping function $\mathrm{T}(\mathrm{k})$ that determines the new pixel intensity value based on $\operatorname{CDF}(\mathrm{k})$, where, k is the pixel value in the gray intensity level.

Bilinear interpolation in CLAHE prevents blocking artifacts introduced during the remapping process. This method interpolates new pixel intensity values based on the grayscale values of surrounding pixels. If the grayscale value at sample point P is k , and the interpolated pixel value is $\mathrm{k}^{\prime}$, the sample point is surrounded by the points $\mathrm{P}_{\mathrm{a}}, \mathrm{P}_{\mathrm{b}}, \mathrm{P}_{\mathrm{c}}$, and $P_d$. The corresponding remapping of the grayscale levels from k is denoted as $\mathrm{S}_{\mathrm{P}_{\mathrm{a}}}(\mathrm{k}), \mathrm{S}_{\mathrm{P}_{\mathrm{b}}}(\mathrm{k}), \mathrm{S}_{\mathrm{P}_{\mathrm{c}}}(\mathrm{k})$, and $\mathrm{S}_{\mathrm{P}_{\mathrm{d}}}(\mathrm{k})$. The interpolated pixel value at the grayscale level $\mathrm{k}^{\prime}$ can be calculated from the following equation:

where, $\alpha$ and $\beta$ are the normalized distances with respect to the point $\mathrm{P}_{\mathrm{a}}$.

3. Methodology

In this study, we utilize the YOLOv9 model to detect Indonesian vehicle license plates and employ EasyOCR to extract the characters from them. Both YOLOv9 and EasyOCR are known for their good performance within the scope of ANPR technology. YOLOv9 is a state-of-the-art model for real-time object detection, while EasyOCR offers outstanding accuracy and ease of use in recognizing alphanumeric characters. The performance of EasyOCR is further improved by the application of image enhancement techniques, including Real-ESRGAN and CLAHE. This combination makes the system both reliable and efficient. The overall workflow of this research is illustrated in Figure 7.

YOLOv9 is ideal for license plate real-time detection with high accuracy and efficiency in detecting small objects like license plates, which makes it well-suited for this task. The cropping process begins when YOLOv9 detects a license plate, extracting the bounding box area for further processing to obtain a Region of Interest (ROI) [30]. This step isolates the license plate from the rest of the image, not only to take the relevant part of the image but also to reduce the computational load. Consequently, it will improve OCR's ability in detecting and recognizing characters on the license plates. The YOLOv9 model is trained and evaluated to ensure that it operates with high detection accuracy.

The training process is intended to teach the YOLOv9 models about the characteristics of vehicle license plate objects, allowing them to improve their performance in detecting these objects. The training process begins with data preprocessing that resizes the images to a consistent size for maintaining consistency across the dataset. The training data also applies augmentation techniques, including random flipping, brightness adjustments, and scaling, to further improve the model's robustness and ability to handle varying conditions. We select the training hyperparameters based on the work of Singh et al. [31] and Amin et al. [32], whose studies highlighted an effective balance between accuracy and efficiency for license plate detection (see Table 1). Their findings serve as a foundation for optimizing our model's performance. The learning rate is selected in conjunction with the AdamW optimizer to ensure training stability while enabling efficient updates to the model's weights. The training batch size can be adjusted based on both GPU memory utilization and the benefits of gradient updates. The chosen epoch and weight decay help the training process to prevent overfitting. Image size 640 is a standardized size to balance the ability to capture image information while maintaining computational efficiency. The chosen momentum is a standardized value that also stabilizes the training process and ensures the model achieves a minimum loss faster. These hyperparameters are adjusted to help the model adapt more effectively to real-world conditions based on the characteristics of the license plate dataset. Figure 8 is an example of the ROI obtained with YOLOv9.

Hyperparameters | Value |

Learning rate | 0.01 |

Batch size | 16 |

Epochs | 50 |

Image size | 640 |

Optimizer | AdamW |

Momentum | 0.937 |

Weight decay | 0.005 |

The YOLOv9 models are evaluated to assess their performance in detecting license plates. The evaluation process involves a detailed examination of the models' ability to understand how well they perform. The evaluation utilizes standard metrics that provide insight, which are defined in Eqs. (6)-(8):

where, TP (True Positive) refers to the number of objects that are correctly detected by the model, and FP (False Positive) indicates the number of instances where an object is incorrectly detected as a license plate. Precision measures the proportion of correctly detected license plates among all instances that were labeled as license plates by the model. A higher precision value indicates that the detected license plates are truly correct.

where, FN (False Negative) refers to the number of objects that are present in the image but are missed or not detected by the model. Recall measures how effectively the model can detect every important object in the image, including those that might be challenging to detect. A high recall value indicates that the model successfully detects a large proportion of the true objects in the image.

where, represents the overall count of object categories, while denotes the Average Precision for each specific category . AP is a key metric that calculates the precision-recall curve for a category, then summarizes it into a single value. mAP calculates the mean AP across all categories at a given IoU threshold (mAP@50 refers to a minimum IoU of 50%).

Image enhancement will improve image quality after the ROI is obtained. This is important because the cropping process can reduce the image's visual quality, making it harder for OCR to accurately detect and recognize characters on the license plate. Real-ESRGAN and CLAHE are the primary techniques applied in this study to enhance the clarity and detail of license plate images. Real-ESRGAN enhances image resolution and sharpens the characters on the license plate, while CLAHE adjusts contrast distribution in the images to achieve more even contrast. Additional techniques, including deskewing and binarization, are also used to support this method.

Real-ESRGAN is the initial phase in our image enhancement process. In this work, we utilize a scaling factor of ×4 to upscale the resolution of license plate images. The upscaled resolution enhances the clarity of characters that were previously blurred in low-resolution images. Real-ESRGAN was chosen because it can improve the visual quality of real-world images without adding noise or artifacts, allowing even the most challenging license plate images to be processed correctly. This means that the technique can enhance image visuals for better recognition of small and intricate details that are essential for accurate OCR processing, while preserving important features.

Before deskewing, the enhanced images will first be resized again to 920×430 pixels using bilinear interpolation to maintain uniformity across all input images. Furthermore, the deskewing process begins by determining the image's tilt angle. The determined tilt angle is then applied to correct the skew, ensuring that it does not interfere with character detection and recognition. We use the Hough Line Transform, a method for identifying straight lines in images, to calculate the angle. This method calculates the distance between the point (0, 0) and the straight line, known as the normal line, using the following equation [33]:

where, $x$ and $y$ represent the Cartesian coordinates of the boundary point, and $\theta$ denotes the angle between the normal line and the x -axis. The Hough Line Transform computes a two-dimensional parameter space $(\theta, \rho)$ to find the dominant $\theta$ value. The image's tilt angle is determined by identifying the most frequent $\theta$ value from the mapped coordinates in the parameter space. This angle is then used to rotate the skewed license plate image relative to a defined central position using the two-dimensional rotation matrix [34]. The image's point $(\mathrm{x}, \mathrm{y})$ is rotated by an angle $\theta$ using Eq. (10), creating a new point $\left(x^{\prime}, y^{\prime}\right)$.

The local contrast of the vehicle license plate image is adjusted using Contrast Limited Adaptive Histogram Equalization (CLAHE), which divides the image into small 8×8 blocks. In this study, histogram equalization is applied to these blocks with an adaptive clip limit, calculated by the following equation:

where, $\alpha$ is the contrast control parameter, $\sigma_{\mathrm{i}}$ is the standard deviation of image $i$, and $c$ is a small constant to prevent division by zero. This adaptive method ensures more precise contrast adjustment according to the specific conditions of the image.

The value of $\alpha$ is set to 50 to adjust the image contrast. The CLAHE process is performed by separating the RGB image into three individual color channels: Red, Green, and Blue. Each color channel is then processed independently using CLAHE, aiming to adjust the local contrast of each channel. After that, the three-color channels are combined back together to form an RGB image that has been adjusted for contrast as the output.

Binarization simplifies the process of OCR character extraction from vehicle license plate images. Otsu’s binarization method automatically determines the optimal threshold value based on the gray level histogram by dividing it into two distinct classes: background C0 and object C1. Let the probability distribution value at gray level in this method be denoted as pi. The probabilities of class occurrence and the class mean levels are calculated as follows [35]:

and

where, $\omega_{\mathrm{C}_0}=\omega(\mathrm{k})$ is the cumulative distribution value at threshold $k$. The inter-class variance is then calculated as follows:

The optimal threshold value $k^*$ is obtained by maximizing $\sigma_{\mathrm{B}}^2(\mathrm{k})$, which is calculated using the following equation:

Otsu's binarization technique segments the image into foreground (character areas on the license plate) and background by evaluating the pixel values and the threshold $\mathrm{k}^*$. The input image $\mathrm{T}(\mathrm{X}, \mathrm{Y})$ is segmented based on the threshold $\mathrm{k}^*$ in the following manner [36]:

where, $\mathrm{G}(\mathrm{X}, \mathrm{Y})$ is a function that groups the pixel intensities from $\mathrm{T}(\mathrm{X}, \mathrm{Y})$ into two feature vectors. This function has the criterion that if the intensity value of the pixel $\mathrm{T}(\mathrm{X}, \mathrm{Y})$ in gray level is greater than or equal to the threshold $\mathrm{k}^*$, then that pixel is considered an object. In contrast, if the intensity value of the pixel is less than the threshold $\mathrm{k}^*$, then the pixel is considered part of the background.

After all previous processes are completed, EasyOCR is the final step in detecting and recognizing the characters on the license plate, converting them into digital text. The performance of EasyOCR is then evaluated using an accuracy metric to see the effect of each image enhancement technique applied to the license plate images. This evaluation examines how different image enhancement techniques, such as resolution upscaling and contrast adjustment can affect the accuracy of the character recognition process. The accuracy metric is calculated by comparing the predicted characters with the ground truth from the original image using Eq. (17).

where, $n_{\text {truth }}$ is the number of characters in the ground truth, and Levenshtein Distance LD is the minimum number of character changes required to transform the predicted text into the actual text. This includes additions, deletions, or substitutions of characters, allowing for an evaluation of the prediction accuracy [37].

The Indonesia LPR Dataset from Roboflow was created primarily for vehicle license plate recognition [38]. This dataset comprises a variety of images of Indonesian vehicles, including their license plates (see Figure 9). The images provided include variations in contrast conditions, viewpoints, and vehicle plate types. Furthermore, the images are taken in a variety of settings such as roads, parking lots, and public areas. To match with the research purposes, we limit our emphasis to the license plate class within this dataset. It contains a total of 7,913 images, divided into three categories: 6,203 for training, 846 for validation, and 864 for testing.

4. Results and Discussions

This section presents the results of implementing Indonesian license plate recognition in real time, utilizing YOLOv9 for license plate detection, and applying image enhancement methods to improve character recognition accuracy with EasyOCR. The evaluation of each method provides an overview of the performance of license plate recognition, especially in conditions that align with real-world scenarios.

The entire training process utilized P100 GPU computation available on the Kaggle platform. Three variations of the YOLOv9 model were used for training: YOLOv9s, YOLOv9m, and YOLOv9c, along with more recent object detection models from YOLOv10 and YOLO11, including YOLOv10s, YOLOv10m, YOLO11s, and YOLO11m. Figure 10 shows how effectively these models performed when the hyperparameters described in Table 1 were applied. The steadily decreasing loss values indicate the model got more accurate at detecting license plates. Metrics detailed in Section 3.1 were used to evaluate each model, and the results show that YOLOv9s achieved the highest mAP@50 values at 99.3% (see Table 2). This model has a better result not only in mAP@50, but also in precision and mAP50-95. Apart from that, the comparative analysis between YOLOv9, YOLOv10, and YOLO11 explains that the ‘small’ models have more balanced results in precision and mAP@50-95 compared to larger models, such as the ‘medium’ models. These results suggest how well the smaller models perform under different conditions that might appear in ANPR tasks, especially in Indonesian areas. Smaller models also offer more computational efficiency. It is really ideal for real-time tasks that require both fast processing and high accuracy.

Model | P | R | mAP@50 | mAP@50-95 |

YOLOv9s | 0.988 | 0.974 | 0.993 | 0.753 |

YOLOv9m | 0.984 | 0.976 | 0.988 | 0.744 |

YOLOv9c | 0.985 | 0.965 | 0.988 | 0.746 |

YOLOv10s | 0.985 | 0.952 | 0.987 | 0.744 |

YOLOv10m | 0.973 | 0.956 | 0.988 | 0.74 |

YOLO11s | 0.982 | 0.967 | 0.987 | 0.741 |

YOLO11m | 0.974 | 0.976 | 0.989 | 0.735 |

The EasyOCR evaluation was conducted by taking 100 sample images of Indonesian vehicles. YOLOv9s was applied to each sample image to detect license plate objects and obtain the ROI. Next, the visual quality of the ROI image is enhanced with the image enhancement methods described in Section 3.2, as shown in Figure 11. First, Real-ESRGAN mitigates the impact of low-resolution surveillance cameras and motion blur, which is often caused by fast-moving vehicles. The blurring effect on characters can be significantly reduced for better readability by enhancing image resolution. Secondly, CLAHE specifically handles underexposed license plate objects at night or in other low-contrast situations. Furthermore, deskewing and binarization are used as the support techniques. Deskewing handles skewed license plate that might happen while implementing real-time recognition. This technique can be considered an important step because OCR systems generally read characters in a straight line. Finally, binarization is used to separate characters from the background part. In summary, these enhancement techniques can make characters on the license plates more legible and improve the overall accuracy of the following character recognition process.

The evaluation results in Table 3 clearly demonstrate an improvement in accuracy as each enhancement method is applied. According to the evaluation results of 100 tested samples, EasyOCR can achieve the highest average accuracy of 84.36%, while deskewing and binarization alone only achieve an average accuracy of 49.76%. Real-ESRGAN greatly increased the average accuracy of EasyOCR by enhancing the details of license plates in low-resolution images, indicating this technique is effectively refining characters in the license plate area in real-world scenarios, while some low-contrast image samples were effectively adjusted using CLAHE technique. This combination can significantly improve the accuracy of character detection and recognition by EasyOCR (see Figure 12).

Methods | Average Accuracy |

Only Deskewing and Binarization | 49.76% |

Real-ESRGAN | 78.67% |

Real-ESRGAN+CLAHE | 84.36% |

Although EasyOCR can achieve relatively high accuracy with the proposed method, there are some limitations that need to be discussed. The proposed method focuses on detecting and recognizing Indonesian vehicle license plates under Indonesian regional conditions. The further adjustment is needed to perform optimally in regions with significantly different license plate designs. However, it remains an unresolved challenge where the vehicle license plates are physically damaged, including cracks, scratches, or degraded. The current method relies solely on improving visual clarity, and cannot address physical damage factors. Apart from that, Table 4 highlights the limitation of YOLOv9 without image enhancement techniques, which struggles to detect license plate objects in a low-contrast condition. This implies that the application of several image enhancement techniques is also important in the detection process. Table 4 also highlights the limitation of applied EasyOCR, which frequently misrecognizes certain characters on Indonesian vehicle license plates. For example, characters such as ‘Q’ are frequently mistaken for ‘O’, ‘O’ for ‘0’, and ‘G’ for ‘6’. These errors are generally caused by the visual similarities between the characters. Additional training to fine-tune EasyOCR using a dataset specifically composed of Indonesian license plate characters could improve its ability to distinguish them.

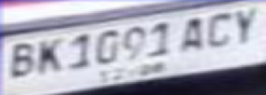

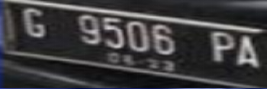

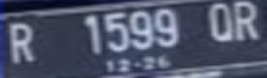

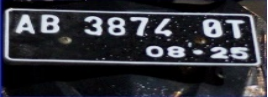

License Plate Images (ROI) | Image Size | Detected by YOLOv9 | Ground Truth | EasyOCR Prediction | Accuracy |

| 71×45 | Yes | BK1091ACY | BK1091ACY | 100.0% |

| 83×70 | Yes | G9506PA | 69506PA | 85.71% |

| 69×35 | Yes | R1599QR | R1599OR | 85.71% |

| 658×356 | Yes | AB3874OT | AB38720T | 75.0% |

| 53×39 | Yes | AB2833SZ | B2833 | 62.5% |

| 53×39 | Yes | B888SML | 6886SHL | 57.14% |

| 640×640 | No | BH3485CI | - | 0% |

5. Conclusions

The rising number of vehicles in Indonesia has emphasized the need for automated traffic surveillance systems to support law enforcement. Various methods from previous studies have been proposed to improve their performance. As one of them, the integration of Real-ESRGAN and CLAHE with YOLOv9 and EasyOCR has demonstrated a strong performance improvement in ANPR implementations. YOLOv9s outperformed other models by achieving the highest mAP@50 value of 99.3%, along with high precision and mAP50-95 scores. The smaller models offer a more balanced trade-off between accuracy and computational efficiency. This makes them well-suited for real-time ANPR applications in Indonesia. Furthermore, EasyOCR's accuracy in detecting Indonesian vehicle license plates has improved with the implementation of YOLOv9 and image enhancement techniques. Based on the 100 samples, EasyOCR was able to achieve the highest average accuracy of 84.36% with the proposed method. This means that the combination of Real-ESRGAN and CLAHE was able to address common challenges in real-world ANPR by reducing the effects of low resolution, blurring, and poor contrast.

However, extremely low-contrast visuals are still a challenge for YOLOv9 and EasyOCR. Image enhancement should be applied before the object detection step to guarantee that license plate objects can be detected even in low-quality images. One method to consider is automatic CLAHE with dual gamma correction. This method combines CLAHE with a two-level gamma correction technique and strict parameter control. The first gamma increases the overall image block brightness, whereas the second gamma adjusts the contrast in the darker areas. It is used to adaptively enhance contrast with strong dark shadows in each image block. Other techniques like denoising and sharpening could also make the characters on the license plate more clearly visible. Future studies will investigate additional image enhancing methodologies, including noise reduction approaches, to increase the quality of license plate images.

This paper is supported by the National Science and Technology Council, Taiwan (Grant No.: MOST-111-2637-H-324-001-). This research is partially supported by the Vice-Rector of Research, Innovation, and Entrepreneurship at Satya Wacana Christian University.