Gait Based Person Identification Using Deep Learning Model of Generative Adversarial Network

Abstract:

The proliferation of digital age security tools is often attributed to the rise of visual surveillance. Since an individual's gait is highly indicative of their identity, it is becoming an increasingly popular biometric modality for use in autonomous visual surveillance and monitoring. There are various steps used in gait recognition frameworks such as segmentation, feature extraction, feature learning and similarity measurement. These steps are mutually independent with each part fixed, which results in a suboptimal performance in a challenging condition. It can be done independently of the users' involvement. Low-resolution video and straightforward instrumentation can verify an individual's identity, making impersonation a rarity. Using the benefits of the Generative Adversarial Network (GAN), this investigation tackles the problem of unevenly distributed unlabeled data with infrequently performed tasks. To estimate the data circulation in various circumstances using constrained observed gait data, a multimodal generator is applied here. When it comes to sharing knowledge, the variety provided by the data generated by a multimodal generator is hard to beat. The capability to distinguish gait activities with varying patterns due to environmental dynamics is enhanced by this multimodal generator. This system is more stable than other gait-based recognition methods because it can process data that is not equally dispersed throughout a different environment. The system's reliability is enhanced by the multimodal generator's capacity to produce a wide variety of outputs. The testing results show that this algorithm is superior to other gait-based recognition methods because it can adapt to changing environments.

1. Introduction

To identify individuals based on how they walk is the goal of gait recognition, a biometrics application [1]. This paper defines gait recognition as the challenge of determining which individuals can be identified in a set of gait sequences collected by visual cameras. Gait biometrics have the distinct benefit of being practical for long-distance human identification [2]. In other words, low-resolution gait analysis is feasible. Human mobility is described by gait, which encapsulates both its spatial statics and its temporal dynamics. Unlike other biostatistics, gait biometrics can be used to identify people from a distance. Another benefit of using gait recognition is that it does not rely as largely on the subjects' active cooperation as other biometrics do. Gait recognition has proven to be superior in emergency settings like the recent COVID-19 pandemic [3], when surveillance systems failed. There are growing hopes that it will soon be able to guarantee the safety of the general population.

Although studies on gait recognition only began a short time ago [4], they have been actively promoting and expanding the area ever since. We may roughly categorize the history of gait recognition study into three phases. Beginning in the early 1990s [5], the first phase set out to test whether or not remote human identification was possible. Current gait analysis methods have shown reasonable performance; however these methods have only been tested on benchmarks with as few as ten participants [6]. Subsequently, DARPA's Human ID at a Distance (HumanID) [7], [8], [9] programme promoted not only methodologies but also datasets in its second stage. Several template-based methods [10], [11], [12] stand out as the most common automatic recognition methods nowadays. On the other hand, a different class of methods represented pedestrians' structural and kinetic properties using coarse human-model techniques [13], [14], [15]. Over a hundred people and various critical aspects, including view variants and appearance-changing, were considered in the early stages of dataset building the encouraging consequences of the test, it appears that gait recognition is doable and could be useful in the future [16].

At that point, gait recognition studies entered the era of deep learning. Unlike traditional systems relying on hand-crafted features, such as many template-based methods, deep era recognition methods may capture complicated motion features immediately from sequential inputs [17]. Thanks to the advent of deep learning methods, gait detection has shown impressive results on a variety of benchmarks. Gait recognition in increasingly complex settings, such as acknowledgement in a dataset with over ten thousand participants and robust gratitude in the wild, is also a focus of deep era research [18].

The major contributions of deep learning algorithms have led to considerable increases in recognition performance, and the results on the most common benchmarks demonstrate the viability of gait credit as a potent instrument for public safety [19], [20]. The total recognition accuracy on CASIA-B is greater than 93% even in the most challenging appearance-changing environment within the era of deep gait recognition. More than 10,000 participants were delivered to deep gait models as part of the study's recognition on a bigger participant, with the models achieving 97.5% rank-1 correctness on the OUMVLP dataset. To address this problem in a natural environment, GREW and Gait3D set out to investigate large-scale gait identification in the wild, with Gait3D additionally providing 3D explanation to investigate model-based applications. Surprisingly, the HID2022 competition achieved even higher than 95.9% on the setting, with people occasionally pausing to take a closer look.

In this study, we present a gait-based recognition system that, among other things, functions more reliably and consistently in a variety of circumstances. A generator, a translating generator, and a classifier make up the proposed model. Labeled data from the training atmosphere setting are all that is needed to train the system. Some events, like falling down, may not generate any data since they are not routine. In order to solve these issues, the boosting generator creates a significant amount of synthetic, unlabeled data to swell the available datasets in the target domain. The generator of the translation performs a "domain transfer," moving control from the original domain to the new one. Information from the original domain, when translated, displays properties not dissimilar to those in the target domain. In a variety of conditions, this ensures the system's continued reliability. The experimental results show that the system performs well in a dynamic environment and outperforms the systems that were compared to it. Section 2 provides a summary of literature, while Section 3 details the methods being projected. In Section 4, the validation investigation is presented, followed by a summary in Section 5.

2. Related Works

Many different approaches and gait models have been investigated for their potential in extracting useful features from the recorded skeletons for pathological gait analysis. To classify data, traditional approaches require manually selecting features of important clinical relevance. So, to aid in the diagnosis of various disorders, several gait models with predetermined properties are presented. They have inherent limitations due to the fact that the preselected features often fail to adequately capture a gait description and may overlook important details in the abstract data. As DNNs have progressed.

Through the use of a convolutional neural network, Sarin et al. [21] offer a touchless multimodal person recognition model that combines gait and speech modalities. For each modality, a unique pipeline was built using convolutional neural networks. This article also investigates many fusion approaches for integrating the two pipelines, illustrating the impact that each approach has on a number of different measures. Weighted regular and product fusion algorithms perform the best for the experimental data.

The purpose of the work of Merlin Linda et al. [22] is to present a smart recognition scheme for viewpoint differences in gait and speech. This paper presents a capsule network (CNN-CapsNet) model for recognising gait and voice changes and details the system's performance. Due to translational invariances in subsampling and speech fluctuations, the gait characteristic relative spatial hierarchies in the image entities are the main focus of the suggested intelligent system. The suggested work known as CNN-CapsNet is able to automatically train spatial information and by adapting to equal variances regardless of the viewpoint used. The proposed research would use a CNN-CapsNet model to settle disagreements about cofactors and gait recognition. To detect walking marks in surveillance videos, multimodal fusion techniques utilising hardware sensor strategies are preferable to the suggested CNN-CapsNet, which has several limitations. It can also serve as a prerequisite instrument for studying, recognising, detecting, and confirming malware procedures. Recently, (GCNN) has been used in gait investigation research [23] since it is a more advanced form. By zeroing in on the relationships between highly linked nodes, graph convolution is able to accurately extract spatial information from human skeletons.

Modeling and forecasting time-series signals is a specialty of the recurrent neural network (RNN), which works particularly well with signals of variable lengths. Gait data is one area where this method has been put to use. For the purpose of online gait phase estimation using input joint angle and position data, Kidziński et al. [24] opted for a long short-term memory (LSTM) system.

A gait anomaly acknowledgement is proposed by Sadeghzadehyazdi et al. [25], which makes use of Kinect skeleton data to capture spatiotemporal patterns. The suggested model represents the interdependence of various body joints during locomotion by taking the skeleton as a whole into account. The proposed model takes into account a multi-class framework, as opposed to the standard two-class or single-class approaches used in skeleton-based systems. A multi-class approach like this can be easily modified for use in settings other than motion capture labs, allowing for more frequent and cheaper gait evaluations. In order to detect nine distinct gaits, The publicly accessible Walking gait dataset is used to train and test the suggested deep learning model, which has a regular accuracy of 90.57%. Using transfer learning, the validation process examines the model's performance on two more publicly available datasets. The model achieved an average accuracy of 83.64% on one dataset with three classes and 90.83% on another with six classes of normal/pathological gait patterns. This work demonstrates the promise of marker less modalities like Kinect for the development of more efficient and cost-effective health infrastructures for ageing in place.

Using LSTM and the inertial sensors built into modern cell phones, Zou et al. [26] accomplished recognition in the wild. Due to its folded design, the RNN is more complicated and time-consuming to train than the CNN. Even while promising results have been shown in recent research on further improvement or the combination of RNN and CNN [26], their work is not suited for generalisation across other subjects.

Generative methods. The initial application of GAN was to normalise variations in gait photographs [27]. To further enhance generative approaches, Yu et al. [28] developed a multi-loss strategy, with the goal of cumulative while decreasing the intra-class variation. In order to learn view-specific features, He et al. [29] created a Multi-task Generative Adversarial Network (MGAN). To extract view-invariant features, (DiGGAN) [30] framework was presented. To separate gait features from appearance features, Zhang et al. [31] designed a system.

Training the system requires labelled data from the training environment (referred to as the original environment or source domain) and unlabelled data from users in the new environment (referred to as the test environment or target domain). Asking users to collect large amounts of data of all activities in the new ecosystem is not user-friendly, so only small amounts of data Users can perform actions randomly. Some non-regular activities such as falling were not reported. To solve the problems, a boosting generator is used to generate large amounts of pseudo-unlabelled data to increase the amount of data in the target domain. We add a small loss in the incentive generator loss function, which is useful in situations where data collected from users is not uniformly distributed among different functions. A translation generator translates a domain from a source domain to a target domain.

3. Proposed System

As can be seen in Figure 1, there are a total of three components to the system: two generators and a categorization model. An electric booster is the first component. It is trained to provide artificial gait data that closely mimics actual gait data from the target field during the boosting generator training segment. The boosting generator is used to create additional artificial data that lacks labels because there is a limit to the amount of unlabelled data that can be obtained from the target domain before training the system. The automatic translation system comes next. The original domain's labelled gait data are all translated into the new domain. Target domain activity labels can be applied to the translated outputs because the variety of actions does not change during translation. The translation generator might help fill in any gaps if any actions taking place in the target domain are overlooked while collecting data. The distribution of gait data in the goal domain is more closely approximated. At last, a classification model is developed using both the real gait data two areas and the fake gait data from the two producers.

Let's pretend we have two collections of real data, one branded (Xl, Y) and one unlabeled (Xu). The "Xl, Y" data originates from the "source domain," or the original training environment, whereas the "Xu" data comes from the "target domain," or the new environment. The ground-truth label for the activity is y, and Xl is the gait sample from Xl. The xu notation designates the Xu gait sample. It is possible to train the boosting generator G bo to produce unlabeled false gait data xfu that is identical to xu. A discriminator D is utilised to tell the difference between real and created gait samples, and the loss of these distinctions is then propagated back to the boosting generator during training. The generator has been fine-tuned to produce the fewest possible deviations between generated and real samples.

Due to the fact that some tasks are not routinely carried out by users, the distribution of the unlabeled data composed from the target field may not be consistent [32]. For the sake of the system's ability to thoroughly examine the aspects of gait data for all activities, we recommend that the generated data be spread more consistently. To this end, we craft the marginal loss $\mathcal{L}_{m}$, several activities, for training the increasing generator. The Shannon entropy of a sample drawn from a specific distribution is the expected value of the information term that sample. If there is no discernible pattern in the distribution of classes for a given element, then its classification is unknown with certainty. In other words, we can coerce the model into making the gait examples generated in batches more evenly distributed by increasing their entropy. This can be done by increasing the resulting gait data's Shannon entropy, which is denoted in Eq. (1).

where, H stands for the Shannon entropy, p for the class distribution, M for the number of generated data, I for the index of generated data, and y for the output class. As a result, it is possible to maximise the marginal loss and find a more unvarying deliver y of output data. Entropy loss from trying to minimise the gap among the actual and simulated data is used to determine the boosting generator loss $\mathcal{L}_{{G}_{bo}}$.

where, the marginal loss is represented by λ and the entropy loss by $\varepsilon$, respectively. In order to fabricate information in the desired domain, we can employ the generator.

The translator is split into a decoder (G). It's what gets used when you need to get gait data out of one domain encoder (E) and into another. The gait data in the source domain and the target domain are randomly sampled and then translated into each other to train the translation generator. The discriminator separates translated outputs from data from the target domain. To reduce the distributional divergence, adversarial training can be applied to the translation generator.

By utilising style transfer approaches, a new domain transferring procedure matrix is provided, which gives the translation generator a multimodal structure. In order to introduce variety into the transfer procedure, the style matrix is simulated at random. Styles in transferred images can vary depending on the style matrix used. Our translation generator mimics environmental dynamics that could impact gait data by generating an interference matrix at random. The gait data of one activity can be translated from the source area into the target domain with alternative meddling matrices using the translation generator. This allows us to mimic the gait data of this activity while taking into account the various environmental dynamics present in the intended domain. Since this interference matrix introduces randomness, gait data can be transformed in several ways across domains. The model benefits from the diversity of translated gait data by increasing its understanding of gait data in a variety of contexts and environmental dynamics. Two gait samples, x1 and x2, one from the source domain and the other from the target domain, are taken into consideration. The dynamic interference matrix between the gait and the environment is designated as sj.

Prior to its translation into the target domain, x1 is encoded with encoder E. (c, s1) = E(x1). The second interference matrix, $s_2$, is generated by the generator at random. The translated information, $x_1=G_1\left(c, s_1\right)$ and $x_2=G_2\left(c, s_2\right)$, is obtained by recombining the original data with c, which was taken from x1. Then, we'll utilise a discriminator D to tell the created sample apart from data in the target field. We reduce the translation loss $\mathcal{L}_t$ in the following way to get optimal generator performance:

Dissimilar s2 allow the translation generator to provide unique translated productions with the same c. Therefore, we can generate a wide variety of false data in another domain using data from the first domain, each of which corresponds to a unique form of environmental interference. A better estimate of the data distribution in the target domain may be obtained, and the system's adaptability to different types of environmental dynamics can be improved. Our approach employs a translation generator with a multimodal structure, rather than a deterministic one. Coding generated gait data with decoder G requires the use of a simulated interference matrix with a random coefficient distribution. This adds variety to the process of translation. While the gait data is same in structure, it may have various characteristics depending on the interference matrix used in translation. More data, with more varied features, can be created with the aid of the multimodal structure and used to train the system. As a result, the system will be more stable when subjected to varying environmental dynamics.

The technology is able to do a translation in reverse, which aids in driving the convergence. To encode the expression $x_{1 \rightarrow 2}, c^*, s_2^*=E_2\left(x_{1 \rightarrow 2}\right)$, where c* and s 2* are the afresh interference matrix, respectively, we make use of an encoder. The previously extracted s1 is recombined with c*. Similarity among the combined data and the original x1 is what the reconstruction loss measures. This reconstruction loss aids in the unification of content code c across domains. The $\mathcal{L}_R$ loss is the reconstruction loss.

The overall translation generator loss is calculated by adding the reconstruction loss.

where, is the renovation loss coefficient. After the conversion generator has been trained, we convert the remaining source-domain data to the target-domain format. The labels y from the original domain can be inherited by the translated data set xfl, yielding the inherited label y0. Furthermore, we can construct a wide variety of xfl by employing a variety of s2. Our classifier can be trained using all of these labelled synthetic data.

A CNN is used to extract, and a fully connected layer is used as the classifier in this model. In order to solve classification challenges, CNN is commonly employed. As its feature extractors can efficiently learn pertinent characteristics for high dimensional data, it lowers the necessity for expert knowledge. To classify data, we use a model with three convolutional layers C(nk nk; n fm), The classifier is built with these last three layers, which have full connectivity between them. The abbreviated notation for the model's structure is: The formula goes as follows: $C(5 \times 5 ; 32) \downarrow P \rightarrow C(5 \times 5 ; 128) \rightarrow P \rightarrow C(5 \times 5 ; 128) \rightarrow P \rightarrow F \rightarrow F \rightarrow F$. Use leaky rectified linear units (leaky ReLus) in this case. The classifier must divide the genuine data (xl and xu) and the boosting generator's fake data xfl into $(n+1) k+1$ categories, where k is the sum of activities we wish to categorise. There are k classes in total; the first k are the genuine data classes (1,...,k), the next k are the fake data classes last class is the unlabeled fake data from the generator. Generally speaking, here is how the objective function $\mathcal{L}_o$ looks:

where, y and y' are labels from the original domain and the translated domain, correspondingly, and an is the index of data sets produced by the translation generator. Each phrase in L c has a weight, meant by w1, w2, and w3, that is determined by validation. In the first term, we look at the first k classes to see if xl is in the right one. The second step is to make sure that xu falls into one of the valid data types (1, 2, ..., k). Crucial information is found in the third term. Due to the fact that the translation generator produced n unique outputs, it is necessary to properly categorise the translated data inside each group. As a result of the numerous possible dynamic changes, the system is provided with additional info of conceivable CSI data for one class in various environmental circumstances. A more diverse and well-balanced set of data is used to train the classifier, rather than only one set of deterministic translated outputs. This safeguards against inadequate training owing to a lack of data and boosts the system's ability to deal with a constantly shifting, unpredictable environment.

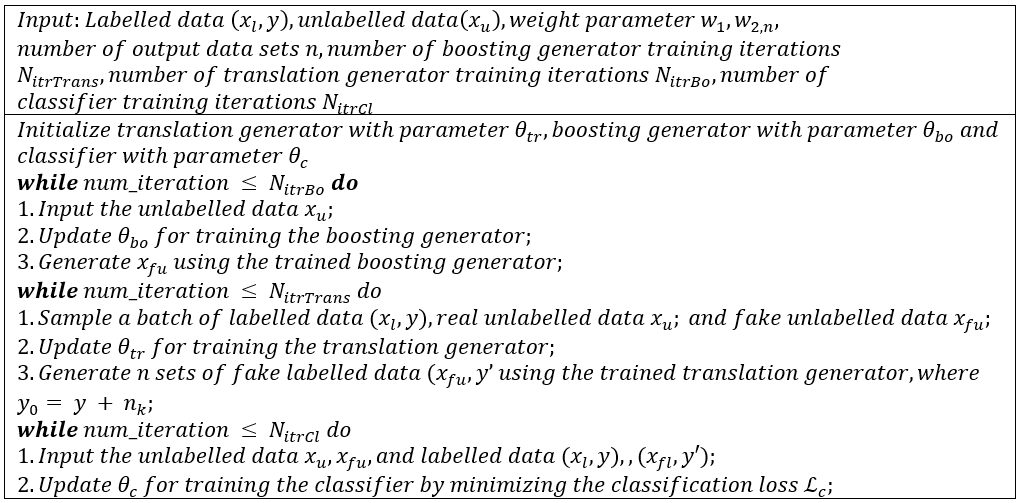

A brief overview of the model-training process is provided here. First, as illustrated in Algorithm 1, train the boosting generator using the unlabeled statistics collected from the target environment. As a next step, we employ the trained to fabricate unlabeled data with a more consistent distribution. The translation generator learns to convert data from the source domain to the target domain by combining data from both domains. Once the translation generator has been trained, any data in the source domain can be automatically converted into the desired format in the target field. These translated products may retain the labels assigned to them in the original domain. The classification system is then trained using $x_u, x_{f u},\left(x_l y\right)$ and $\left(x_{f l}, y^{\prime}\right)$.

Algorithm 1. Training Phase of proposed model

4. Results and Discussion

There are 124 people in CASIA-B [33]. Each subject has 110 arrangements total across 11 views (0°, 18°, ..., 162°, 180°), with 10 sequences corresponding to each of the 11 views. Six of the ten sequences (nm01-nm06) were captured in natural light, two were captured inside coats, and two were captured using bags. NM01-NM04 are used as a reference set, while NM05-NM06, BG01-BG02, and CL01-CL02 serve as probe sets during testing. The dataset satisfies the training for multi-view gait picture sequence peer group by providing samples under varying view, garment, and carrying circumstances.

This is a big view-variation gait dataset called OUMVLP [34]. More than 10,000 people are represented in this dataset. Fourteen angles (0, 15, 30, 45, 60, 75, 90, 180, 195, 210, 215, 240, 255, and 270) are used to record each subject's sequences. Each perspective has two sequences, just like OULP. With the help of our proposed gait sequence creation approach, we may increase the sum of arrangements for each subject in the dataset. Figure 2 shows a few examples.

Experimentation is conducted on a PC with an Intel(R) Core (TM) i7-7700K CPU, 8 GB of RAM, and an NVIDIA GeForce 1050-ti graphics processing unit. The data is then averaged among the 11 perspectives, with duplicates removed. The accuracy of the 18° probe, for instance, is determined by averaging the results of 10 gallery views other than the 18° gallery. Not only does gait data include information in the walking direction, but it also provides data perpendicular to the walking direction. The parallel view angle (90 degrees) and the vertical view angles (zero degrees and one hundred eighty degrees) can cause some of the gait information to be lost. The results of these experiments are shown in Table 1, Table 2 and Table 3 for the conditions of everyday walking, bag carrying, and various outfits, respectively.

Method | 0 | 18 | 36 | 54 | 72 | 90 | 108 | 126 | 144 | 162 | 180 | meanSTD |

CNN | 93.1 | 92.6 | 90.8 | 92.4 | 87.6 | 95.1 | 94.1 | 94.2 | 92.4 | 90.2 | 90.3 | 92.32.4 |

MGAN | 74 | 84.7 | 89.9 | 86.3 | 79.4 | 77.1 | 83.5 | 86.2 | 80.2 | 81.2 | 69.4 | 81.56.1 |

DiGGAN | 89 | 96.4 | 99.5 | 96.7 | 91.4 | 88.6 | 94.5 | 97.8 | 94.6 | 93.02 | 84.2 | 93.54.9 |

Proposed | 91 | 98.3 | 99.4 | 98.1 | 93.2 | 91.9 | 95.3 | 98.7 | 95.6 | 95.2 | 92.1 | 95.33.8 |

Table 1 shows that the proposed technique still performs well. The average improvement for the 90 degree, 0 degree, and 180 degree viewing angles over the current algorithms was 1.8%, 2.4%, and 2.7%, respectively. Meanwhile, after 11 views, it got better by 0.8%. Small sample training (ST) using only 24 items across three different walking circumstances has been upgraded with the proposed strategy (NM, BG, CL). The suggested model outperforms the state-of-the-art by 2.8%, 4.4%, and 0.7%, respectively, when compared to CNN, MGAN, and DiGGAN. The reason for this is that our model obtains more silhouettes than based on gait templates in a single batch since it treats the input as a set. Therefore, more time and space data are available to this model. After that, an improved training model is attained by using metric learning to acquire more discriminative gait features than the DiGGAN.

Recognizing someone's gait when their appearance has changed is difficult. In the BG sequence on CASIA-B, the approach performs admirably. As can be shown in Table 2, this strategy has proven to be highly effective in BG subsequence. It outperforms state-of-the-art algorithms, topping the competition across 11 different perspectives with an average accuracy that is 4.4%, 1.3%, and 1.5% higher than that of competing models. All the major gait variants are accounted for, further demonstrating the high invariance of gait features. The cross-view criteria are also considered by certain existing methods. This model is also the most accurate when the training set is large and data interference is high. Importantly, this is a large performance gap between BG and NM in all techniques except for the proposed method, which further proves that our gait features have robust invariance to all chief gait alterations.

Method | 0 | 18 | 36 | 54 | 72 | 90 | 108 | 126 | 144 | 162 | 180 | meanSTD |

CNN | 48.5 | 58.5 | 59.7 | 58 | 53.7 | 49.8 | 54 | 61.3 | 59.5 | 55.9 | 43.1 | 54.7 ± 5.6 |

MGAN | 64.7 | 73.8 | 80.1 | 75.6 | 67.9 | 64.9 | 71.0 | 77.8 | 79.4 | 77.0 | 66.6 | 72.6 ± 5.9 |

DiGGAN | 81.6 | 91.7 | 91.6 | 89.1 | 82.1 | 80.00 | 82.9 | 90.8 | 92.7 | 91.6 | 77.9 | 86.5 ± 5.6 |

Proposed | 86.0 | 93.3 | 95.1 | 92.1 | 88.0 | 82.3 | 87.0 | 94.2 | 95.9 | 90.7 | 82.4 | 89.7 ± 4.9 |

Method | 0 | 18 | 36 | 54 | 72 | 90 | 108 | 126 | 144 | 162 | 180 | meanSTD |

CNN | 37.7 | 57.2 | 66.6 | 61.1 | 55.2 | 54.6 | 55.2 | 59.1 | 58.9 | 48.8 | 39.4 | 54.0 ± 8.9 |

MGAN | 55.0 | 62.4 | 66.0 | 61.1 | 55.9 | 54.4 | 59.4 | 60.8 | 62.8 | 52.7 | 44.6 | 57.7 ± 6.0 |

DiGGAN | 57.2 | 76.1 | 80.9 | 77.2 | 72.3 | 70.2 | 73.0 | 72.6 | 75.0 | 69.6 | 50.9 | 70.4 ± 8.8 |

Proposed | 65.8 | 80.7 | 82.5 | 81.1 | 72.7 | 71.5 | 74.3 | 74.6 | 78.7 | 75.8 | 64.4 | 74.7 ± 5.9 |

Results further demonstrate the great value added by recurrently learnt partial gait illustration baseline methods employing labelled representations and unlabelled representations. This model's superiority is most obvious under the most difficult alterations in outward appearance, such as walking while dressed differently (Table 2) or toting around heavy goods (Table 3). As a result of the GAN module's ability to acquire more stable representations in the face of external perturbations, the model is more resistant to changes in appearance. Tabulated in Table 4 below are the results for another data set in terms of these angular dimensions:

Method | 0 | 30 | 60 | 90 | meanSTD |

CNN | 77.7 | 86.9 | 85.3 | 83.5 | 83.4 ± 4.1 |

MGAN | 68.9 | 82.3 | 82.1 | 81.7 | 78.8 ± 6.6 |

DiGGAN | 74.7 | 84.4 | 83.7 | 82.2 | 81.3 ± 4.5 |

Proposed | 78.5 | 87.5 | 85.8 | 85.4 | 84.3 ± 4.0 |

Gait data recorded at 0 degrees, 90 degrees, and 180 degrees show that the proposed model performs similarly to the aforementioned benchmarking approaches. This is because information about the gait, such as the walker's stride, is obscured at zero and 180 degrees, when the camera lens is perpendicular to the walker's line of motion. As an added complication, when the camera lens is at a right angle to the direction in which the subject is walking, it may miss important details about the gait, such as the subject's body swing, which would otherwise be apparent. But the results demonstrate that the proposed method outperforms the benchmarking methods in majority of these challenging scenarios, suggesting that it is able to learn more discriminative features for extreme viewing angle changes. Gait data acquired at intermediate angles, such as 36 degrees, 54 degrees, 126 degrees, and 144 degrees, where postures are evident, also show outstanding results when using this method. An additional intriguing finding is that the suggested model is robust in the face of varying subject and training sample sizes, as well as imaging configurations. The CASIA-B and OU-MVLP datasets contain 259,013 and 13,680 gait sequences from 125 and 10,307 individuals, respectively, but were acquired using different methods. Despite this, the suggested model routinely outperforms the benchmarking methods on both datasets. At the end, it's important to note that the suggested approach, MGAN. The extracted gait representations can then be more easily classified, retrieved, and transmitted.

5. Conclusion

This work explores the issue of performance degradation under environment dynamics in gait-based recognition systems trained on data from a small number of static environments. To address this issue, the authors of this study proposed a model that makes use of multimodal GAN and the marginal loss generator to make the scheme more resistant to the impact of a wide variety of unforeseen dynamic changes in its surrounding environment. This new approach is created to learn robust view-invariant characteristics of a person's stride. The approach has been rigorously evaluated across four distinct test protocols on the massive CASIA-B and OU-MVLP gait datasets. The obtained recognition results demonstrated the model's superiority over other state-of-the-art techniques. For normal walk, the proposed model achieved nearly 91.9%, the existing DiGGAN model achieved 88.6%. These same models achieved 82.3% and 80% for carried bags. There are still issues with gait identification that need to be fixed, such as gait occlusion. Therefore, moving forward, research will centre on addressing these issues and expanding gait recognition's use cases to include areas like Person Re-Identification and Behavior Acknowledgement.

The data used to support the findings of this study are available from the corresponding author upon request.

The authors declare no conflict of interest.